© 2023 borui. All rights reserved.

This content may be freely reproduced, displayed, modified, or distributed with proper attribution to borui and a link to the article:

borui(2023-12-16 00:01:26 +0000). softmax function. https://borui/blog/2023-12-16-en-softmax-function.

@misc{borui2023,
  author = {borui},
  title = {softmax function},
  year = {2023},
  publisher = {borui's blog},
  journal = {borui's blog},
  url = {https://borui/blog/2023-12-16-en-softmax-function}
}

what does it do

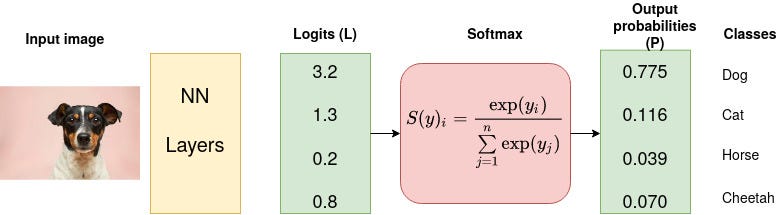

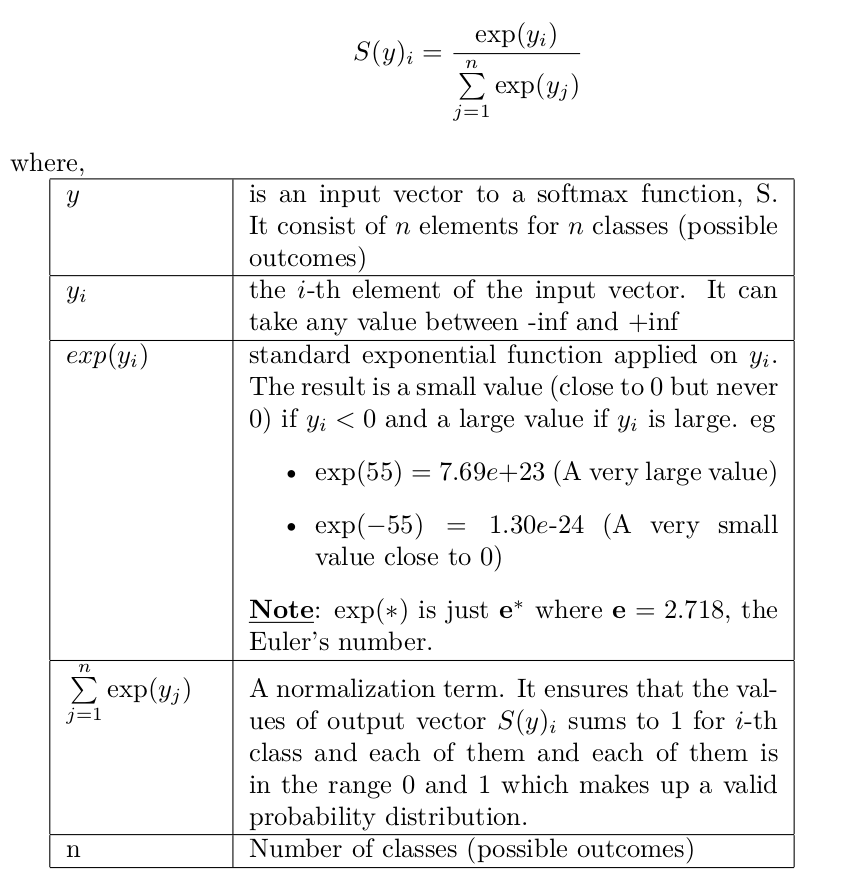

The softmax function, also known as softargmax or the normalized exponential function, converts a vector of K real numbers into a probability distribution over K possible outcomes.

how is it calculated
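For reference, the standard definition can be written as below. It matches the two steps described later in this post (exponentiate each entry, then divide by the sum). The symbols are notation chosen here: z is the input vector of K real numbers and z_i is its i-th entry.

```latex
\[
\operatorname{softmax}(z)_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}, \qquad i = 1, \dots, K
\]
```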

things to care about in implementation

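If the input values might be large, e^x can overflow the range of a floating-point number. One simple way to solve this is to subtract the maximum of the input numbers from each x before exponentiating. This does not change the result, because the common factor e^(-max) cancels between the numerator and the denominator.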
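The max-subtraction trick described above can be sketched as follows. This is a minimal illustration, not a reference implementation: the name `softmax_stable` and the example vectors are made up for this sketch.

```python
import numpy as np

def softmax_stable(z):
    """Softmax that shifts the inputs by their maximum before exponentiating."""
    z = np.asarray(z, dtype=float)
    shifted = z - np.max(z)          # largest entry becomes 0, so exp() is at most 1
    exp_z = np.exp(shifted)
    return exp_z / np.sum(exp_z)

big = [1000.0, 1001.0, 1002.0]       # np.exp(1000.0) alone would overflow to inf
small = [0.0, 1.0, 2.0]              # the same vector shifted by 1000

print(softmax_stable(big))
print(softmax_stable(small))         # same probabilities as for `big`
```

Both calls print the same probabilities, since shifting every input by a constant leaves softmax unchanged.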

softmax vs argmax

Limitations of the Argmax Function

The argmax function returns the index of the maximum value in the input array.

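e.g. argmax( [0.25, 1.23, -0.8] ) -> 1, or [0,1,0] when written as a one-hot vector

One limitation of the argmax function is that its gradient with respect to the raw outputs of the neural network is zero wherever it is defined, because a small change in the inputs does not change which entry is the largest. As you know, it’s the backpropagation of gradients that facilitates the learning process in neural networks, so a zero gradient gives the network nothing to learn from.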
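A quick numerical check of this claim, as a rough sketch: nudge each input slightly and see whether the one-hot argmax output moves. The helper `one_hot_argmax` and the step size `eps` are assumptions made for this illustration.

```python
import numpy as np

def one_hot_argmax(z):
    """Return a one-hot vector marking the position of the largest entry."""
    z = np.asarray(z, dtype=float)
    out = np.zeros_like(z)
    out[np.argmax(z)] = 1.0
    return out

z = np.array([0.25, 1.23, -0.8])
eps = 1e-3  # small perturbation, chosen arbitrarily for this sketch

# Finite-difference estimate of d(output)/d(z[i]) for each input entry
grads = []
for i in range(len(z)):
    nudged = z.copy()
    nudged[i] += eps
    grads.append((one_hot_argmax(nudged) - one_hot_argmax(z)) / eps)

for i, g in enumerate(grads):
    print(i, g)  # each estimate is a vector of zeros
```

The output does not change under a small nudge, so every estimated gradient is zero.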

benefits of softmax

From the plot of e^x, you can see that, regardless of whether the input x is positive, negative, or zero, e^x is always a positive number.

Let’s apply the softmax formula on the vector z, using the steps below:

- Calculate the exponential e^x of each entry.

- Divide the result of step 1 by the sum of the exponentials of all entries.
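The two steps above can be sketched in NumPy as follows. The rounding to 3 decimals is only there to keep the printed output short, and the max-subtraction for large inputs is left out to keep the sketch minimal.

```python
import numpy as np

def softmax(z):
    """Softmax following the two steps above, rounded to 3 decimals."""
    exp_z = np.exp(z)                  # step 1: exponential of each entry
    out_z = exp_z / np.sum(exp_z)      # step 2: divide by the sum of the exponentials
    return np.round(out_z, 3)

z1 = [0.25, 1.23, -0.8]
print(softmax(z1))  # all entries are positive and sum to about 1
```

Because e^x is always positive, every entry of the result is positive, even for the negative input -0.8.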

Why use something as math-heavy as the softmax activation? Can we not just divide each of the output values by the sum of all outputs?

Well, let’s try to answer this by taking a few examples.

Use the following function to return the array normalized by the sum.

    import numpy as np

    def div_by_sum(z):
        # Normalize by dividing each entry by the sum of all entries
        sum_z = np.sum(z)
        out_z = np.round(z / sum_z, 3)
        return out_z

Consider z1 = [0.25, 1.23, -0.8], and call the function div_by_sum. In this case, though the entries in the returned array sum up to 1, it has both positive and negative values, and one entry is larger than 1. We still aren’t able to interpret the entries as probability scores.

    z1 = [0.25, 1.23, -0.8]
    div_by_sum(z1)

    array([ 0.368,  1.809, -1.176])

Let z2 = [-0.25, 1, -0.75]. In this case, the elements of the vector sum to zero, so the denominator is 0. When you divide by the sum to normalize, you’ll get runtime warnings, as division by zero is not defined.

    z2 = [-0.25, 1, -0.75]
    div_by_sum(z2)

    RuntimeWarning: divide by zero encountered in true_divide
    array([-inf,  inf, -inf])

In this example, z4 = [0, 0.9, 0.1]. Let’s check both the softmax and normalized scores.

    z4 = [0, 0.9, 0.1]
    print(div_by_sum(z4))
    print(softmax(z4))

    [0.  0.9 0.1]          # normalized
    [0.219 0.539 0.242]    # softmax

Having zeros in deep learning is bad because backpropagation won't be able to learn anything from a value of 0. In other words, the weights can't be updated from zero values. Softmax avoids exact zeros here, since e^x is always positive.

In essence, the softmax activation can be perceived as a smooth approximation to the argmax function.
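One way to see this is to multiply the inputs by a growing factor before applying softmax: the output moves toward the one-hot vector that argmax would give. The `scale` parameter and the helper name `scaled_softmax` are assumptions introduced only for this sketch.

```python
import numpy as np

def scaled_softmax(z, scale=1.0):
    """Softmax of scale * z, with the usual max-shift for numerical safety."""
    z = scale * np.asarray(z, dtype=float)
    exp_z = np.exp(z - np.max(z))
    return exp_z / np.sum(exp_z)

z = [0.25, 1.23, -0.8]
for scale in (1, 10, 100):  # hypothetical scale factors, for illustration only
    print(scale, np.round(scaled_softmax(z, scale), 3))
```

At every scale the largest probability sits at the argmax position, and as the scale grows the output approaches [0, 1, 0] while staying differentiable.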

Equivalence of the Sigmoid, Softmax Activations for N = 2

Sigmoid and softmax activations are equivalent for binary classification tasks.
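A small numerical check of this equivalence, as a sketch: with two scores z1 and z2, the first softmax output equals the sigmoid of the difference z1 - z2. The function names and example scores below are made up for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def softmax2(z1, z2):
    """Softmax over exactly two scores."""
    exp_z = np.exp(np.array([z1, z2], dtype=float))
    return exp_z / np.sum(exp_z)

z1, z2 = 1.23, -0.8  # arbitrary example scores
p = softmax2(z1, z2)

print(p[0], sigmoid(z1 - z2))        # the two values agree
print(p[1], 1.0 - sigmoid(z1 - z2))  # and so do the complements
```

This follows from dividing the numerator and denominator of e^z1 / (e^z1 + e^z2) by e^z1, which gives 1 / (1 + e^-(z1 - z2)).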

loss function

As with other classification tasks, a model with a softmax output is usually trained with the cross-entropy loss function.
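As a rough sketch of how the two fit together, assuming a single true class given by its index: the cross-entropy loss is the negative log of the softmax probability assigned to that class. The names `cross_entropy`, `logits`, and `target_index` are chosen here for illustration.

```python
import numpy as np

def cross_entropy(probs, target_index):
    """Negative log of the probability assigned to the true class."""
    return -np.log(probs[target_index])

logits = np.array([0.25, 1.23, -0.8])
exp_z = np.exp(logits - np.max(logits))  # max-shift for numerical safety
probs = exp_z / np.sum(exp_z)

loss_likely = cross_entropy(probs, 1)    # true class is the most likely one
loss_unlikely = cross_entropy(probs, 2)  # true class is the least likely one

print(loss_likely, loss_unlikely)        # the second loss is larger
```

The loss is small when softmax puts high probability on the true class and grows as that probability shrinks.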

reference

- Kiprono Elijah Koech. (Sep 30, 2020). Softmax Activation Function — How It Actually Works [Blog post]. Towards Data Science. Retrieved from https://towardsdatascience.com/softmax-activation-function-how-it-actually-works-d292d335bd78

- Bala Priya C. (Jun 30, 2023). Softmax Activation Function: Everything You Need to Know [Blog post]. Pinecone. Retrieved from https://www.pinecone.io/learn/softmax-activation/

- Softmax function. (12 February 2024). In Wikipedia. Retrieved Mar 15, 2024, from https://en.wikipedia.org/wiki/Softmax_function

further reading

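- PP鲁. (Aug 05, 2020). 三分钟读懂Softmax函数 [Understand the softmax function in three minutes] [Blog post]. Zhihu Column. Retrieved from https://zhuanlan.zhihu.com/p/168562182

- 触摸壹缕阳光. (Feb 10, 2020). 一文详解Softmax函数 [The softmax function explained in detail in one article] [Blog post]. Zhihu Column. Retrieved from https://zhuanlan.zhihu.com/p/105722023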